
Microsoft unveils VALL-E

Microsoft has announced a new text-to-speech AI model called VALL-E, which can closely mimic a person’s voice from a three-second audio sample. Using that sample as a prompt, it can generate audio of the person saying anything, in a way that attempts to preserve the speaker’s emotional tone.

Microsoft trained VALL-E’s speech-synthesis capabilities on LibriLight, an audio library assembled by Meta that contains 60,000 hours of English-language speech from more than 7,000 speakers, mostly drawn from LibriVox public-domain audiobooks. VALL-E can also imitate the “acoustic environment” of the sample audio, so the generated speech can sound as if it were recorded in the same setting as the sample.

What is VALL-E?

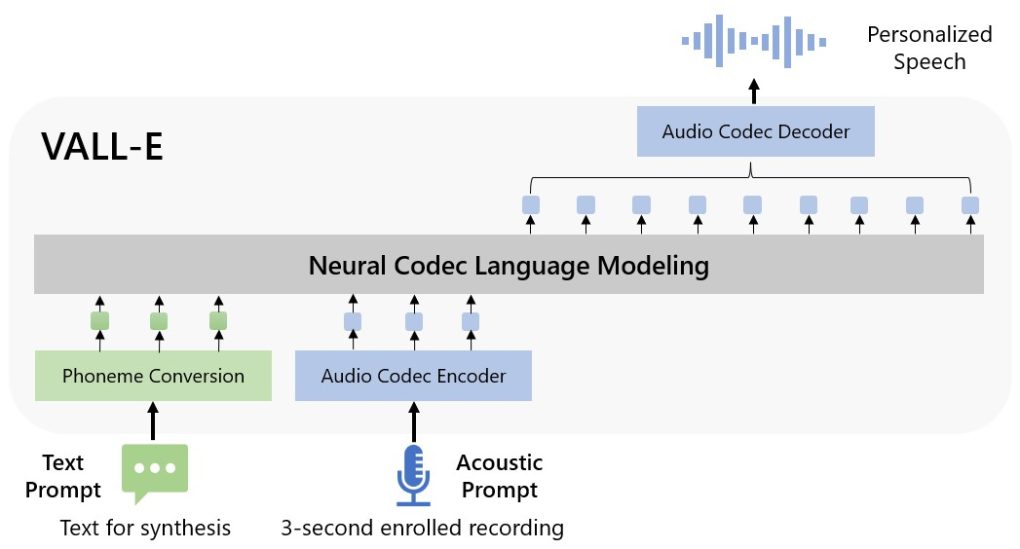

VALL-E is a neural codec language model. It builds on a technology called EnCodec, which Meta announced in October 2022. Unlike other text-to-speech methods, which typically synthesize speech by manipulating waveforms, VALL-E generates discrete audio codec codes from text and acoustic prompts. In essence, it analyzes how a person sounds, uses EnCodec to break that information into discrete components called “tokens”, and draws on its training data to predict how that voice would sound speaking phrases beyond the three-second sample.
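The tokenization step can be illustrated with residual vector quantization (RVQ), the scheme EnCodec uses to turn each short frame of audio into a stack of discrete codes. The sketch below is a toy under stated assumptions, not Microsoft’s or Meta’s code: the codebooks are random rather than learned, the “frame” is a made-up vector rather than an encoder output, and every function name is hypothetical. The defaults in `codec_token_count` assume EnCodec’s 24 kHz model at 6 kbps (75 frames per second, 8 codebooks of 1,024 entries), the configuration reportedly used for VALL-E.

```python
import math
import random
from typing import List

Vector = List[float]
Codebook = List[Vector]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_index(book: Codebook, target: Vector) -> int:
    """Index of the codebook entry closest to `target` (first one wins on ties)."""
    return min(range(len(book)), key=lambda i: squared_distance(book[i], target))


def make_toy_codebooks(num_codebooks: int, codebook_size: int, dim: int, seed: int = 0) -> List[Codebook]:
    """Random stand-ins for learned codebooks (hypothetical; EnCodec learns its own).

    Later codebooks use a smaller scale because they only need to cover the
    residual left by earlier ones. Entry 0 is the zero vector, so choosing the
    nearest entry can never make the residual larger.
    """
    rng = random.Random(seed)
    codebooks = []
    for level in range(num_codebooks):
        scale = 0.5 ** level
        book = [[0.0] * dim]
        book += [[rng.uniform(-scale, scale) for _ in range(dim)] for _ in range(codebook_size - 1)]
        codebooks.append(book)
    return codebooks


def rvq_encode(frame: Vector, codebooks: List[Codebook]) -> List[int]:
    """Turn one frame into one token per codebook via residual vector quantization."""
    residual = list(frame)
    tokens = []
    for book in codebooks:
        idx = nearest_index(book, residual)
        tokens.append(idx)
        # The next codebook only has to quantize what this one missed.
        residual = [r - c for r, c in zip(residual, book[idx])]
    return tokens


def rvq_decode(tokens: List[int], codebooks: List[Codebook]) -> Vector:
    """Rebuild an approximate frame by summing the selected code vectors."""
    out = [0.0] * len(codebooks[0][0])
    for idx, book in zip(tokens, codebooks):
        out = [o + c for o, c in zip(out, book[idx])]
    return out


def codec_token_count(seconds: float, frame_rate_hz: int = 75, num_codebooks: int = 8) -> int:
    """Discrete codes for a clip, assuming 75 frames/s and 8 codebooks per frame."""
    return round(seconds * frame_rate_hz) * num_codebooks


if __name__ == "__main__":
    books = make_toy_codebooks(num_codebooks=8, codebook_size=1024, dim=8)
    rng = random.Random(1)
    frame = [rng.uniform(-1.0, 1.0) for _ in range(8)]
    tokens = rvq_encode(frame, books)
    print("tokens for one frame:", tokens)
    print("reconstruction error:", math.dist(frame, rvq_decode(tokens, books)))
    print("codes in a 3-second prompt:", codec_token_count(3.0))  # 225 frames x 8 = 1800
```

Under these assumed settings, a three-second prompt becomes 225 frames of 8 codes each, or 1,800 tokens: a sequence a language model can condition on in the same way a text model conditions on words.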

Microsoft has not released VALL-E’s code for others to experiment with, because of the potential social harm the technology could cause. Misuse could fuel mischief and deception, such as spoofing voice identification or impersonating a specific speaker, and Microsoft’s researchers appear to be aware of this risk. They also note, however, that the technology could have positive applications in areas such as voice assistants, accessibility, and entertainment.

VALL-E has the potential to change how we interact with technology, but the same ability that makes it useful for voice assistants, accessibility, and entertainment also makes it open to misuse. As the technology continues to advance, researchers and developers will need to weigh its ethical implications carefully.